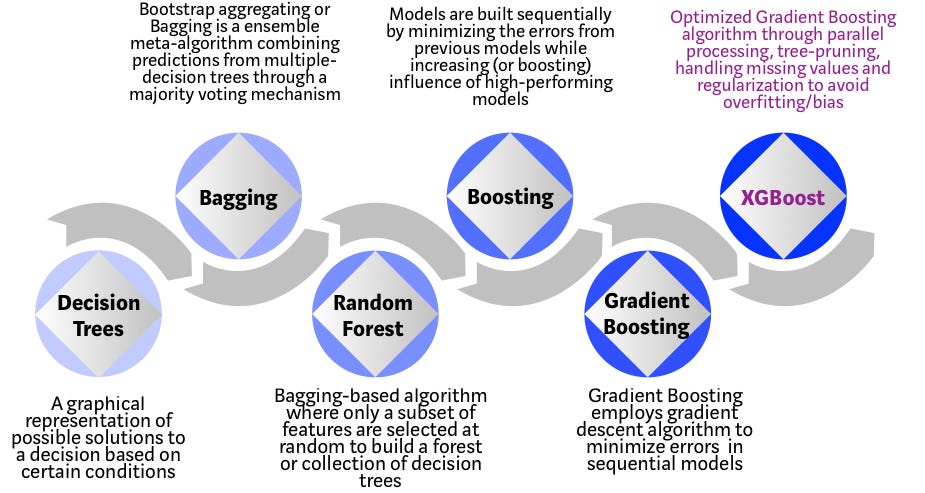

XGBoost stands for eXtreme Gradient Boosting. The term gradient boosting originates from Friedman's paper "Greedy Function Approximation: A Gradient Boosting Machine".
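For reference, here is the generic gradient boosting loop from that line of work, summarized in our own notation rather than quoted from the paper:

- $F_m$ is the model after $m$ trees.
- $L$ is a differentiable loss.
- $h_m$ is the regression tree fitted at step $m$.
- $\nu \in (0, 1]$ is a shrinkage factor. This is the learning rate that XGBoost also exposes.

$$
\begin{aligned}
F_0(x) &= \arg\min_{c} \sum_{i=1}^{n} L(y_i, c) \\
r_{im} &= -\left[\frac{\partial L(y_i, F(x_i))}{\partial F(x_i)}\right]_{F = F_{m-1}}, \quad i = 1, \dots, n \\
h_m &= \text{regression tree fitted to } \{(x_i, r_{im})\}_{i=1}^{n} \\
\rho_m &= \arg\min_{\rho} \sum_{i=1}^{n} L\big(y_i, F_{m-1}(x_i) + \rho\, h_m(x_i)\big) \\
F_m(x) &= F_{m-1}(x) + \nu\, \rho_m\, h_m(x)
\end{aligned}
$$

Each new tree is fitted to the negative gradient (the "pseudo-residuals") of the loss at the current model.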

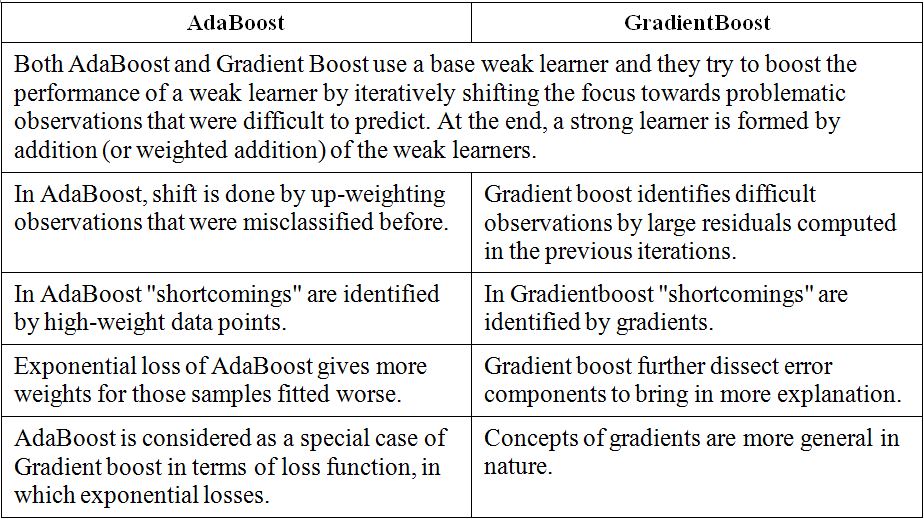

Comparison Between AdaBoost and Gradient Boosting (Statistics for Machine Learning)

ML - Gradient Boosting.

In addition, Chen and Guestrin introduce shrinkage (a learning rate) and column subsampling. The validation set, it seems to me, is a kind of intermediary used to tune the model fitted on the training set. XGBoost, like other gradient boosting machine routines, has a number of parameters that can be tuned to avoid overfitting.
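The usual workflow can be sketched as follows:

1. Split the data into training, validation and test sets.
2. Pick a hyperparameter by comparing scores on the validation set only.
3. Touch the test set once, at the end, to score the final model.

This is a minimal illustration, not XGBoost's own API. The helper names (`train_val_test_split`, `pick_on_validation`, `fit_scaled_mean`) and the toy "scaled mean" model are hypothetical, and the 60/20/20 split is an assumption.

```python
import random


def train_val_test_split(rows, train_frac=0.6, val_frac=0.2, seed=0):
    """Shuffle rows and split them into train / validation / test lists (hypothetical helper)."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder is held out until the very end
    return train, val, test


def pick_on_validation(candidates, fit, score, train, val):
    """Fit one model per candidate setting on train; keep the lowest validation error."""
    return min(candidates, key=lambda c: score(fit(c, train), val))


def fit_scaled_mean(c, train):
    # Hypothetical toy model: always predict c * (mean target of the training rows).
    mean_y = sum(y for _, y in train) / len(train)
    return lambda x: c * mean_y


def mse(model, rows):
    return sum((model(x) - y) ** 2 for x, y in rows) / len(rows)


rows = [(i, 10.0 + (i % 3) - 1) for i in range(100)]  # targets cycle through 9, 10, 11
train, val, test = train_val_test_split(rows)
best_c = pick_on_validation([0.0, 0.5, 1.0], fit_scaled_mean, mse, train, val)
final_model = fit_scaled_mean(best_c, train)
print(len(train), len(val), len(test), best_c)
print("test MSE:", mse(final_model, test))  # reported once, after tuning is finished
```

The test set plays no part in choosing `best_c`. This is what keeps its error an unbiased estimate for the final model.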

20 May 19. XGBoost is an implementation of gradient boosted decision trees designed for speed and performance. XGBoost is short for the eXtreme Gradient Boosting package.

The ratio of features used, i.e. … Its intention is to improve and fine-tune the model. XGBoost reduces overfitting by penalizing complex models through regularization.
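Concretely, in Chen and Guestrin's formulation, the regularization is a penalty $\Omega$ added to the training loss for every tree $f_k$ in the ensemble. In the formula below:

- $T$ is the number of leaves in a tree.
- $w$ is the vector of its leaf weights.
- $\gamma$ and $\lambda$ are the tuning parameters. The XGBoost library calls them `gamma` and `lambda`.

$$
\mathcal{L}(\phi) = \sum_{i} l(\hat{y}_i, y_i) + \sum_{k} \Omega(f_k),
\qquad
\Omega(f) = \gamma T + \tfrac{1}{2}\lambda \lVert w \rVert^2
$$

A larger $\gamma$ makes each extra leaf more expensive, so trees stay smaller. A larger $\lambda$ shrinks the leaf weights toward zero.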

So let me try to say this in layman's terms. Gradient Boosting Neural Networks. XGBoost stands for eXtreme Gradient Boosting, which was proposed by researchers at the University of Washington.

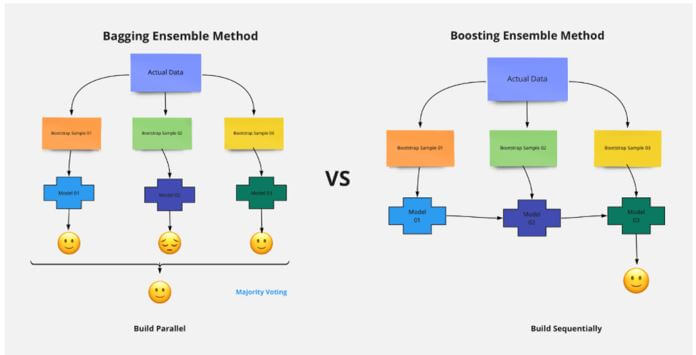

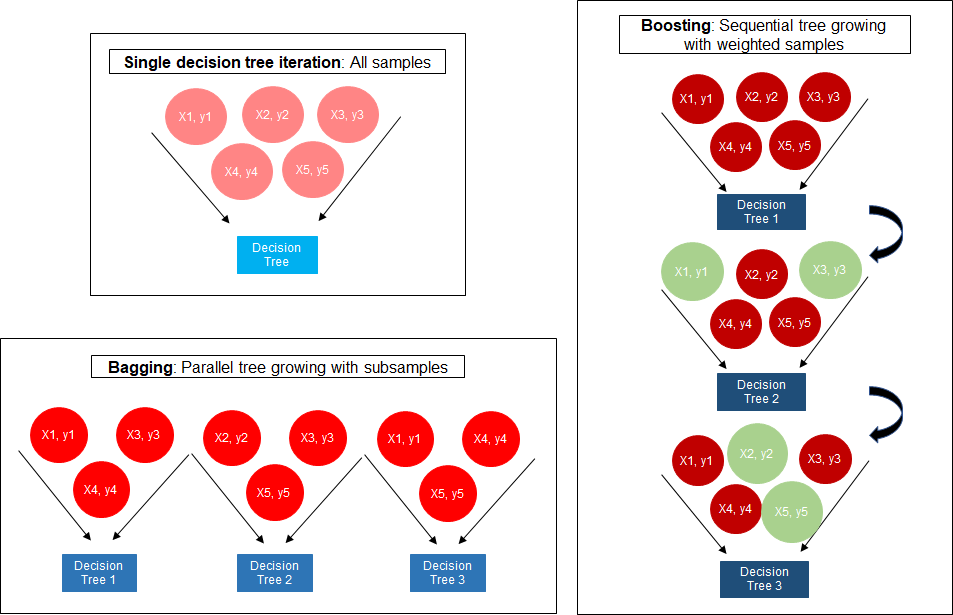

It is a library written in C++ that optimizes training for gradient boosting. Regularized Gradient Boosting is also an… Bagging vs Boosting in Machine Learning.

ML | XGBoost (eXtreme Gradient Boosting), 19 Aug 19. I will mention some of the most obvious ones. Difference between Batch Gradient Descent and Stochastic Gradient Descent.

If the regularization term is set to 0, XGBoost's objective reduces to that of ordinary gradient boosted trees. Any remaining differences in predictions come from implementation details, such as XGBoost's second-order approximation of the loss. XGBoost is an algorithm that has recently been dominating applied machine learning and Kaggle competitions for structured or tabular data. Still a bit hung up on the difference between validation and test.
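One concrete place where this shows up is the value stored in each leaf. Chen and Guestrin's second-order objective gives the optimal leaf weight as $w^* = -G / (H + \lambda)$. Here $G$ and $H$ are the sums of the loss gradients and Hessians of the rows in that leaf.

For squared-error loss, every Hessian is 1, so with $\lambda = 0$ the leaf value is simply the mean residual, as in a plain least-squares regression tree. A positive $\lambda$ shrinks it toward zero. The function names below are hypothetical, and the sketch only covers a single leaf.

```python
def squared_error_grad_hess(y_true, y_pred):
    # For l = 0.5 * (y_pred - y)^2: gradient = y_pred - y, Hessian = 1.
    grads = [p - t for t, p in zip(y_true, y_pred)]
    hessians = [1.0] * len(y_true)
    return grads, hessians


def leaf_weight(grads, hessians, reg_lambda=0.0):
    """Optimal leaf value w* = -G / (H + lambda) for the rows falling into one leaf."""
    G = sum(grads)
    H = sum(hessians)
    return -G / (H + reg_lambda)


y_true = [1.0, 2.0, 3.0]
y_pred = [0.0, 0.0, 0.0]
g, h = squared_error_grad_hess(y_true, y_pred)
print(leaf_weight(g, h, reg_lambda=0.0))  # 2.0: the mean residual, as in a plain regression tree
print(leaf_weight(g, h, reg_lambda=3.0))  # 1.0: shrunk toward zero by the L2 penalty
```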

So that if we had a 60… Shrinkage scales each newly added tree by a learning rate, and column subsampling randomly selects a subset of features for each tree. Both allow further reduction of overfitting in the gradient tree boosting algorithm. Validation and test sets are both used to provide an unbiased evaluation of a model fit, though the test set evaluates the FINAL model fit.
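To see how shrinkage and column subsampling slot into the boosting loop, here is a deliberately simplified sketch in pure Python. It makes three assumptions:

- Squared-error loss.
- Depth-1 regression trees (stumps) instead of full trees.
- A fresh random subset of features drawn for each tree.

The `learning_rate` and `colsample` arguments loosely mirror XGBoost's `learning_rate` (`eta`) and `colsample_bytree` parameters. The functions themselves (`fit_stump`, `boost`) are hypothetical and are not how the library is implemented.

```python
import random


def fit_stump(X, residuals, feature_ids):
    """Best single split (feature, threshold) by squared error on the residuals."""
    best = None
    for j in feature_ids:
        for threshold in sorted({row[j] for row in X}):
            left = [r for row, r in zip(X, residuals) if row[j] <= threshold]
            right = [r for row, r in zip(X, residuals) if row[j] > threshold]
            if not left or not right:
                continue  # a split must leave rows on both sides
            lv, rv = sum(left) / len(left), sum(right) / len(right)
            sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
            if best is None or sse < best[0]:
                best = (sse, j, threshold, lv, rv)
    if best is None:  # no valid split: fall back to a constant prediction
        mean = sum(residuals) / len(residuals)
        return lambda row: mean
    _, j, threshold, lv, rv = best
    return lambda row: lv if row[j] <= threshold else rv


def boost(X, y, n_rounds=50, learning_rate=0.1, colsample=1.0, seed=0):
    rng = random.Random(seed)
    n_features = len(X[0])
    base = sum(y) / len(y)  # F_0: the constant that minimizes squared error
    trees, pred = [], [base] * len(y)
    for _ in range(n_rounds):
        # Negative gradient of 0.5 * (y - F)^2 is the ordinary residual y - F.
        residuals = [t - p for t, p in zip(y, pred)]
        # Column subsampling: each tree only sees a random subset of the features.
        k = max(1, int(round(colsample * n_features)))
        features = rng.sample(range(n_features), k)
        tree = fit_stump(X, residuals, features)
        trees.append(tree)
        # Shrinkage: only a fraction of each tree's prediction is added.
        pred = [p + learning_rate * tree(row) for p, row in zip(pred, X)]
    return lambda row: base + learning_rate * sum(t(row) for t in trees)


X = [(float(i), float((i * 7) % 5)) for i in range(20)]  # feature 1 is uninformative
y = [1.0 if i >= 10 else 0.0 for i in range(20)]
model = boost(X, y, n_rounds=50, learning_rate=0.1, colsample=0.5)
train_mse = sum((model(row) - t) ** 2 for row, t in zip(X, y)) / len(y)
print("training MSE:", train_mse)  # far below 0.25, the MSE of predicting the mean
```

A smaller learning rate needs more rounds to reach the same training error. In exchange, no single tree can dominate the ensemble.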

Before understanding XGBoost, we first need to understand trees, especially the decision tree. The purpose of this vignette is to show you how to use XGBoost to build a model and make predictions. XGBoost also comes with an extra randomization parameter, which reduces the correlation between the trees.
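Where XGBoost's trees differ from a textbook decision tree is in how they score a candidate split. Chen and Guestrin's exact greedy algorithm works as follows:

1. Sort the rows by the feature value.
2. Accumulate the gradient sum $G_L$ and Hessian sum $H_L$ on the left side of each candidate threshold.
3. Score the threshold with the gain below, and keep the threshold with the highest positive gain.

$$\text{gain} = \tfrac{1}{2}\left[\frac{G_L^2}{H_L+\lambda} + \frac{G_R^2}{H_R+\lambda} - \frac{(G_L+G_R)^2}{H_L+H_R+\lambda}\right] - \gamma$$

The sketch below handles a single feature and ignores missing values. The function names are hypothetical.

```python
def split_gain(G_L, H_L, G_R, H_R, reg_lambda=1.0, gamma=0.0):
    """Regularized gain of splitting a node into left/right children."""
    def score(G, H):
        return G * G / (H + reg_lambda)
    return 0.5 * (score(G_L, H_L) + score(G_R, H_R) - score(G_L + G_R, H_L + H_R)) - gamma


def best_split(xs, grads, hessians, reg_lambda=1.0, gamma=0.0):
    """Exact greedy scan over one feature; returns (threshold, gain), threshold None if no split helps."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    G, H = sum(grads), sum(hessians)
    G_L = H_L = 0.0
    best_gain, best_threshold = 0.0, None
    for pos in range(len(order) - 1):
        i, nxt = order[pos], order[pos + 1]
        G_L += grads[i]
        H_L += hessians[i]
        if xs[i] == xs[nxt]:
            continue  # cannot split between equal feature values
        gain = split_gain(G_L, H_L, G - G_L, H - H_L, reg_lambda, gamma)
        if gain > best_gain:
            best_gain, best_threshold = gain, (xs[i] + xs[nxt]) / 2
    return best_threshold, best_gain


xs = [1.0, 2.0, 3.0, 4.0]
y = [0.0, 0.0, 1.0, 1.0]
grads = [0.5 - t for t in y]  # squared error at a current prediction of 0.5
hessians = [1.0] * len(y)
print(best_split(xs, grads, hessians, reg_lambda=1.0, gamma=0.0))  # splits at 2.5
print(best_split(xs, grads, hessians, reg_lambda=1.0, gamma=1.0))  # gamma too large: no split
```

Because $\gamma$ is subtracted from every gain, a large enough $\gamma$ prunes splits that would only give a small improvement.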

For example, we can change… Lower ratios avoid overfitting. Gradient boosted trees have been around for a while, and there is a lot of material on the topic.
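One way to build intuition for why a lower feature ratio helps is to measure how much the feature sets of different trees overlap. The sketch below draws a random feature subset per tree, in the spirit of XGBoost's `colsample_bytree`, and reports the average Jaccard overlap between pairs of trees.

Two caveats apply. The helpers `sample_columns` and `mean_overlap` are hypothetical. Feature overlap is also only a rough proxy for how correlated the trees' predictions are.

```python
import random
from itertools import combinations


def sample_columns(n_features, ratio, rng):
    """Pick round(ratio * n_features) distinct feature indices (at least one)."""
    k = max(1, int(round(ratio * n_features)))
    return set(rng.sample(range(n_features), k))


def mean_overlap(n_features, ratio, n_trees=50, seed=0):
    """Average Jaccard overlap between the feature subsets of every pair of trees."""
    rng = random.Random(seed)
    subsets = [sample_columns(n_features, ratio, rng) for _ in range(n_trees)]
    pairs = list(combinations(subsets, 2))
    return sum(len(a & b) / len(a | b) for a, b in pairs) / len(pairs)


for ratio in (0.1, 0.5, 0.8):
    print(ratio, round(mean_overlap(20, ratio), 3))
```

Lower ratios give trees that look at more different parts of the data. This is the same lever the "extra randomization parameter" pulls.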

It therefore adds methods to handle… This tutorial will explain boosted trees in a self-contained and principled way using the… Two solvers are included.

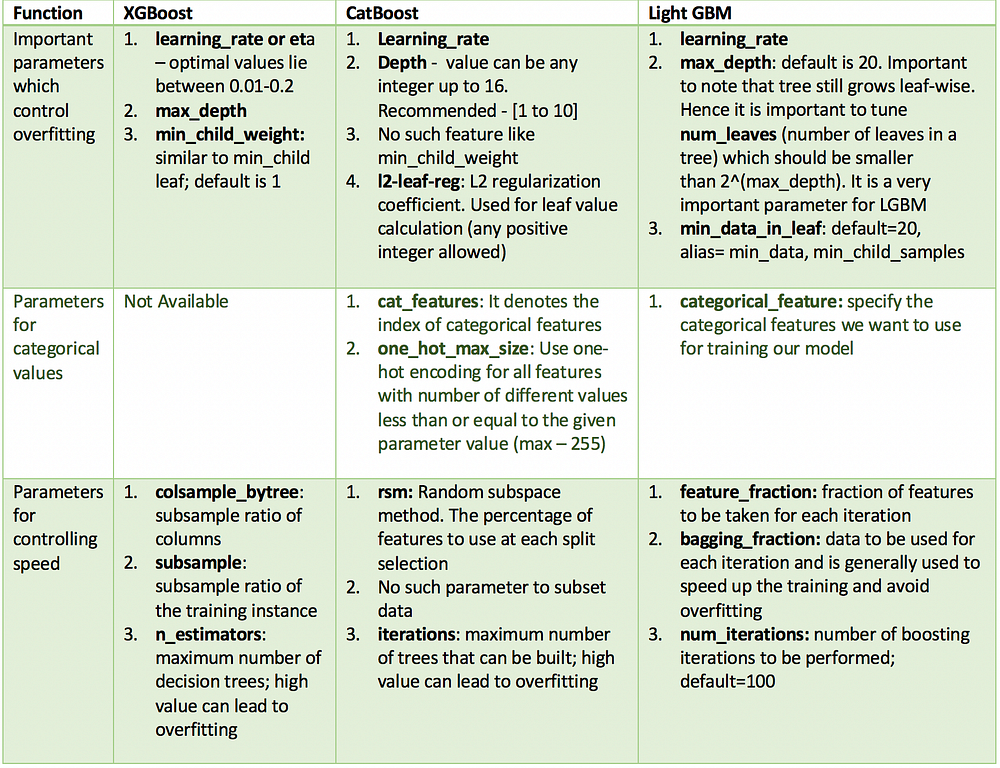

LightGBM vs XGBoost: which algorithm is better? XGBoost is an efficient and scalable implementation of the gradient boosting framework of Friedman et al. (2000) and Friedman (2001). Less correlation between the classifier trees translates to better performance of the ensemble of classifiers.
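The standard argument for this comes from averaging ensembles such as random forests. Boosting adds trees rather than averaging them, so for XGBoost this is intuition rather than a direct derivation.

Suppose the ensemble averages $B$ trees $T_b(x)$, each with variance $\sigma^2$ and pairwise correlation $\rho$. The variance of the average is then:

$$
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B} T_b(x)\right)
= \frac{1}{B^2}\Big(B\sigma^2 + B(B-1)\rho\sigma^2\Big)
= \rho\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
$$

Adding more trees only removes the second term. The first term, $\rho\sigma^2$, can be lowered only by making the trees less correlated.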

In this post you will discover XGBoost and get a gentle introduction to what it is, where it came from, and how you…

How to Choose Between Different Boosting Algorithms, by Songhao Wu (Towards Data Science)

Random Forest vs XGBoost: Top 5 Differences You Should Know

The Structure of Random Forest; Extreme Gradient Boosting (scientific diagram)

Machine Learning: XGBoost Algorithm, Long May She Reign

The Ultimate Guide to AdaBoost, Random Forests and XGBoost, by Julia Nikulski (Towards Data Science)

CatBoost vs LightGBM vs XGBoost (KDnuggets)

The Intuition Behind Gradient Boosting and XGBoost, by Bobby Tan Liang Wei (Towards Data Science)
